1.- INTRODUCTION

Despite the great improvement achieved in face recognition in recent years, performance usually degrades in uncontrolled scenarios. Pose variation is one of the most challenging problems in this kind of application, and many methods have been proposed to deal with it [1]. Among them, frontal face image synthesis has shown several advantages. For example, it can be applied as a preprocessing step before any face recognition framework, and it can be used when only one image per person is available [2,3,4]. However, one of the main disadvantages of these approaches is that most of them are computationally expensive, especially those developed in recent years based on deep learning [5,6,7]. Efficient methods are needed for real-life applications, and it has been shown that deep learning based methods still face difficulties when used in practical applications [8].

Recently, an efficient and effective frontalization method was proposed in [9]. The method is based on the 3D Generic Elastic Model (3DGEM) approach and efficiently synthesizes a 3D model from a single 2D image. In this work we aim to apply that scheme to profile face images. To do so, a method is first used to determine whether the input image is a right or a left profile. Then, a method for the automatic detection of landmark points in profile images is introduced, and the correspondence between the detected points and the 3D model is established in order to initialize the reconstruction and effectively recover the information of the occluded part of the face.

In order to present and analyze the proposal, the rest of this paper is organized as follows: Section 2 reviews the related work; Section 3 gives a general description of the proposed method; Section 4 presents the experimental evaluation conducted to demonstrate the effectiveness of the method; and finally, conclusions and ideas for future work are presented in Section 5.

2.- RELATED WORK

The process of synthesizing a frontal image from a profile face image has two main steps: 1) profile face landmark detection and correspondence, and 2) 2D-3D face modeling and rendering.

2.1.- PROFILE FACE LANDMARK DETECTION

Although face landmark detection has improved significantly in recent years, it remains a difficult problem for facial images with severe occlusion or large head pose variations. Recently, the Menpo benchmark [10] was released to conduct a Landmark Localization Challenge not only on nearly frontal images but also on profile face images. Seven of the eight methods submitted to the challenge [10] were based on deep learning and therefore require large amounts of training images as well as computational resources.

Besides the neural network approaches, only a few works have been proposed for detecting landmarks in profile faces [11,12]. All of them are based on Cascaded Shape Regression, which also requires a large amount of training data and has a high computational cost. In contrast, generative PCA-based shape models, which are in general efficient and have proven to be effective [13], have not been used for this purpose.
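Generative PCA-based shape models of this kind are usually built as a point distribution model: the landmark coordinates of aligned training shapes are stacked into vectors, and principal component analysis yields a mean shape plus a few modes of variation, so that any plausible shape is approximated as the mean plus a weighted sum of modes. The following is a minimal sketch in Python with NumPy, assuming the training shapes are already aligned (Procrustes alignment is omitted); the function name and the 95% retained-variance threshold are illustrative choices, not taken from [13].

```python
import numpy as np

def build_shape_model(shapes, variance_kept=0.95):
    """Build a PCA point distribution model from aligned training shapes.

    shapes: array of shape (n_samples, n_points, 2), assumed already aligned.
    Returns the mean shape vector, the retained modes (as columns of P)
    and their eigenvalues, sorted from largest to smallest.
    """
    shapes = np.asarray(shapes, dtype=float)
    X = shapes.reshape(shapes.shape[0], -1)     # rows: x1, y1, x2, y2, ...
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)               # (2n, 2n) sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]           # largest variance first
    eigvals = np.clip(eigvals[order], 0.0, None)  # drop round-off negatives
    eigvecs = eigvecs[:, order]
    # Smallest number of modes explaining `variance_kept` of the total variance.
    ratio = np.cumsum(eigvals) / eigvals.sum()
    k = min(int(np.searchsorted(ratio, variance_kept)) + 1, len(eigvals))
    return mean, eigvecs[:, :k], eigvals[:k]
```

A new plausible shape can then be synthesized as `mean + P @ b` for a parameter vector `b` with one entry per retained mode, which is what makes such models cheap to evaluate at detection time.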

2.2.- 2D-3D FACE MODELING AND RENDERING

There is a large number of methods that are able to generate a 3D face model from a single 2D image [3,4,5,1]. In particular, 3D Morphable Models (3DMM) [14] have been reported to synthesize high-quality 3D face models from a single input image. In recent years, several works have been proposed to improve this technique. Among them, the 3D Generic Elastic Models (3DGEM) [15] approach is one of the methods that achieve acceptable visual quality with high efficiency. Subsequent works have aimed to improve the synthesis quality of 3DGEM [16] and its robustness to facial expressions [17]. Reducing its running time is another research topic for this technique [16]. Recently, some modifications to the steps of the original 3DGEM approach were proposed in [9] in order to make it more efficient. The proposal achieved a 38x speed-up with respect to the original 3DGEM method, as well as state-of-the-art results on the LFW database.

Other approaches different from 3DGEM have been proposed, but in general they are also time consuming. For example, in [18] a person-specific method is proposed that combines a simplified 3DMM with Structure-from-Motion to improve reconstruction quality. However, the proposal incurs a high computational cost and requires large amounts of training data. Other methods with very good quality results combine elements of 3DMM with convolutional neural networks [5,7], but, like any deep learning approach, they require a large number of training images of different persons in different poses, and they are time consuming not only during training but also at test time.

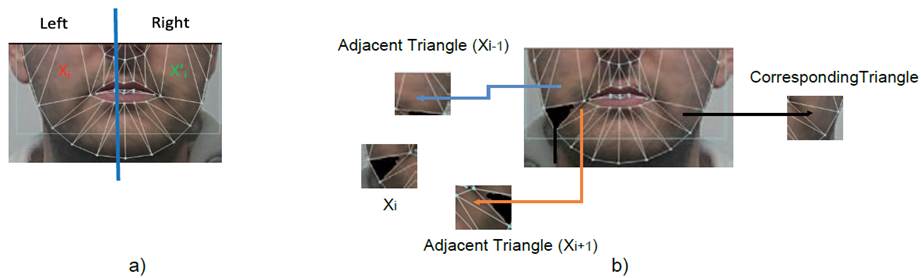

Since we are looking for an efficient method, we have chosen the 3DGEM variant proposed in [9], which has also been shown to effectively recover the information of occluded regions. In that work, missing regions were filled using opposite and adjacent regions (see Figure 1). This strategy must be modified to frontalize profile faces, since in this case most of the regions adjacent to the occluded ones are also occluded.

3.- PROPOSED APPROACH

The pipeline of the proposed method is shown in Figure 2. First, the face is detected and the method proposed in [19] is used to determine whether it is a left or a right profile; the corresponding (left or right) model is then used to obtain the landmark points with an Active Shape Model (ASM) based method. Once the landmark points in the profile image are detected, the correspondence between these points and a 3D mesh is established, and a subset of them is used as a reference to deform the mesh according to the input image. Finally, the 3D mesh is frontalized and the appearance of the face image is incorporated.
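The stage ordering of this pipeline, and in particular the choice between the left and right landmark models, can be expressed as a small orchestration sketch. All stage functions are hypothetical callables supplied by the caller; none of these names correspond to the authors' code or to a real library API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

@dataclass
class FrontalizationPipeline:
    """Hypothetical orchestration of the stages of the proposed method.

    Every stage is injected as a callable; the names are illustrative only.
    """
    detect_face: Callable[[Any], Any]
    classify_profile: Callable[[Any], str]            # returns "left" or "right"
    landmark_models: Dict[str, Callable[[Any], Any]]  # one ASM-style model per side
    deform_mesh: Callable[[Any], Any]                 # landmarks -> deformed 3D mesh
    render_frontal: Callable[[Any, Any], Any]         # (mesh, face) -> frontal image

    def run(self, image):
        face = self.detect_face(image)
        side = self.classify_profile(face)
        if side not in self.landmark_models:
            raise ValueError(f"no landmark model for profile side {side!r}")
        # The profile side decides which landmark model is applied.
        landmarks = self.landmark_models[side](face)
        mesh = self.deform_mesh(landmarks)
        return self.render_frontal(mesh, face)
```

Keeping each stage behind a callable makes it easy to swap, for example, the profile-side classifier of [19] without touching the rest of the pipeline.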

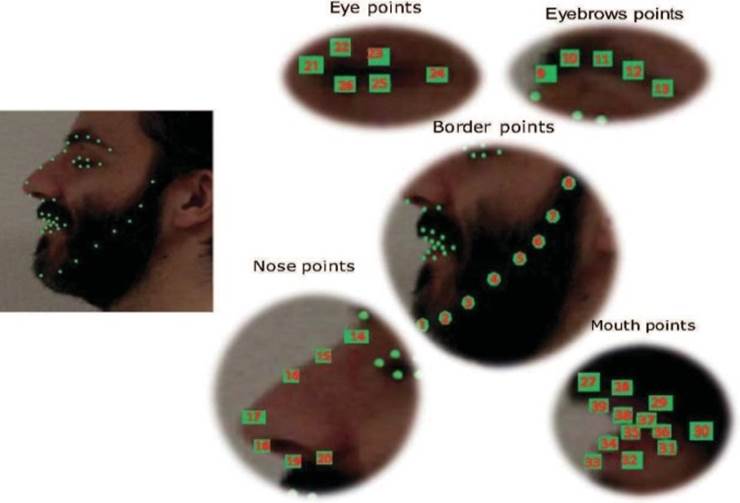

The selected ASM-based landmark detection method was the EP-LBP shape model [20], which has proven to be efficient and easy to modify during training to obtain an effective detection of landmark points. For profile facial landmark detection, a model with 39 points was trained. The landmarks are distributed as follows: contour of the face (8 points), eyebrows (5 points), edges of the nose (7 points), contour of the eye (5 points) and edges of the mouth (13 points). This distribution is shown in Figure 3. Two models were obtained, one for the left profile and another for the right profile; each of them was trained with 100 profile images taken from the Internet, in which the 39 landmarks were manually annotated.
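ASM-style detectors such as EP-LBP alternate a local search for each landmark with a global shape constraint. The EP-LBP local search of [20] is not reproduced here; this minimal sketch shows only the generic ASM shape-constraint step, which projects the candidate points onto a PCA shape model and clamps each parameter to the commonly used range of three standard deviations. It assumes the candidate points are already expressed in the model's coordinate frame (the similarity alignment of a full ASM is omitted), and the function name is hypothetical.

```python
import numpy as np

def constrain_shape(candidate, mean, P, eigvals, k_sigma=3.0):
    """One generic ASM shape-constraint step.

    candidate: (n_points, 2) points proposed by the local search
    mean: (2n,) mean shape; P: (2n, k) orthonormal modes; eigvals: (k,)
    Returns the closest shape allowed by the PCA model.
    """
    x = np.asarray(candidate, dtype=float).reshape(-1)
    b = P.T @ (x - mean)                      # project onto the shape modes
    limit = k_sigma * np.sqrt(eigvals)        # +/- k_sigma standard deviations
    b = np.clip(b, -limit, limit)             # forbid implausible deformations
    return (mean + P @ b).reshape(-1, 2)
```

Because the step is a projection and a clip, its cost is linear in the number of landmarks times the number of modes, which is consistent with the efficiency argument for shape models made in Section 2.1.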

In the original approach [9], the dense 3D mesh is learned in the training stage (from frontal images). Later, in the online stage, 14 landmark points are used as reference and bounded biharmonic deformations are applied in order to deform the 3D mesh in an efficient and accurate way. Therefore, a correspondence is established between the 39 points defined for the profile face and the points of the 3D model obtained during training. Face symmetry is taken into account to deform the complete model and frontalize it.
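How a few reference landmarks drive the deformation of a dense mesh can be illustrated with a simpler technique than the one used in [9]. This sketch uses Gaussian radial basis function (RBF) interpolation, not bounded biharmonic weights: the landmark displacements are interpolated so that each reference point lands exactly on its target, while vertices far from all landmarks barely move. The function name and the kernel width `sigma` are illustrative assumptions.

```python
import numpy as np

def rbf_deform(vertices, src_landmarks, dst_landmarks, sigma=1.0):
    """Move mesh vertices so that src_landmarks map onto dst_landmarks.

    Gaussian RBF interpolation of landmark displacements; a simplified
    stand-in for bounded biharmonic deformation, not the method of [9].
    vertices: (V, 3); src_landmarks, dst_landmarks: (L, 3)
    """
    def kernel(a, b):
        d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-d2 / (2.0 * sigma ** 2))

    displacement = dst_landmarks - src_landmarks          # (L, 3)
    K = kernel(src_landmarks, src_landmarks)              # (L, L)
    # Tiny ridge term keeps the solve stable for nearly coincident landmarks.
    weights = np.linalg.solve(K + 1e-9 * np.eye(len(K)), displacement)
    return vertices + kernel(vertices, src_landmarks) @ weights
```

Bounded biharmonic weights are instead computed over the mesh itself and constrained to lie in [0, 1], which gives smoother and more local control than this RBF stand-in provides.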

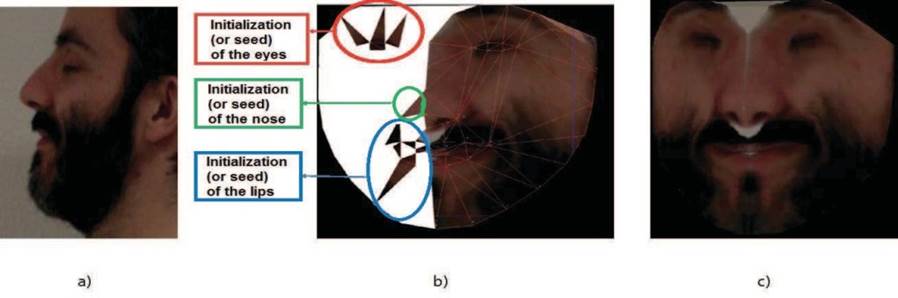

The correspondence between the 2D and 3D points is also used to extract the face appearance. The 2D mesh is triangulated in order to make it denser and achieve a closer correspondence with the 3D model. Through this process, the triangles of the original image that contain the appearance of the face are obtained. For profile images, appearance is available for only one side of the face, so one part of the model does not contain any appearance. As discussed above, in this case the missing regions cannot be filled using opposite and adjacent regions, since most of the adjacent regions do not contain any information. We therefore propose to mirror only the regions around the edges of the eyes, nose and lips, and to use them as seeds to reconstruct the remaining regions, as illustrated in Figure 4.
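The seed-based completion of the occluded half can be sketched on a frontal texture map. The sketch assumes the face midline coincides with the vertical centre of the map, so mirroring is a horizontal flip; seed pixels (the eye, nose and lip regions on the occluded side, given here as a hypothetical mask) are copied from the visible side, and every remaining pixel is then filled by iterative averaging of already-filled 4-neighbours. The paper does not specify the propagation rule, so this diffusion-style fill is an assumption, as are the function and parameter names.

```python
import numpy as np

def mirror_seeds_and_fill(texture, known, seed_mask, max_iters=10_000):
    """Fill the occluded half of a frontal texture map from mirrored seeds.

    texture: (H, W) float array in frontal coordinates, midline at the centre
    known: (H, W) bool, True where appearance was recovered from the profile
    seed_mask: (H, W) bool, hypothetical eye/nose/lip regions to mirror
    """
    tex = texture.astype(float).copy()
    filled = known.copy()
    mirrored = tex[:, ::-1].copy()          # reflect across the vertical midline
    mirrored_known = known[:, ::-1]
    seeds = seed_mask & ~filled & mirrored_known
    tex[seeds] = mirrored[seeds]            # copy seeds from the visible side
    filled |= seeds

    for _ in range(max_iters):
        if filled.all():
            break
        # Sum and count of already-filled 4-neighbours of every pixel.
        pv = np.pad(np.where(filled, tex, 0.0), 1)
        pc = np.pad(filled.astype(float), 1)
        nb_sum = pv[:-2, 1:-1] + pv[2:, 1:-1] + pv[1:-1, :-2] + pv[1:-1, 2:]
        nb_cnt = pc[:-2, 1:-1] + pc[2:, 1:-1] + pc[1:-1, :-2] + pc[1:-1, 2:]
        frontier = ~filled & (nb_cnt > 0)
        if not frontier.any():
            break                           # remaining pixels are unreachable
        tex[frontier] = nb_sum[frontier] / nb_cnt[frontier]
        filled |= frontier
    return tex, filled
```

Placing seeds inside the eye, nose and lip regions means these identity-relevant areas are copied directly instead of being smoothed in from the face contour, which matches the motivation given for seeding.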

Figure 4 Process of obtaining the face appearance for the occluded part of the face: a) input image; b) image mapped onto the 3D model, with the seeds placed in the region where no information is available; c) reconstruction of the frontal image.

By using the seeds for initialization, an acceptable frontal image can be reconstructed from a profile image, despite the large loss of information. Although the result is not very pleasant visually, it retains good identification value, as shown in the experimental evaluation section.

4.- EXPERIMENTAL EVALUATION

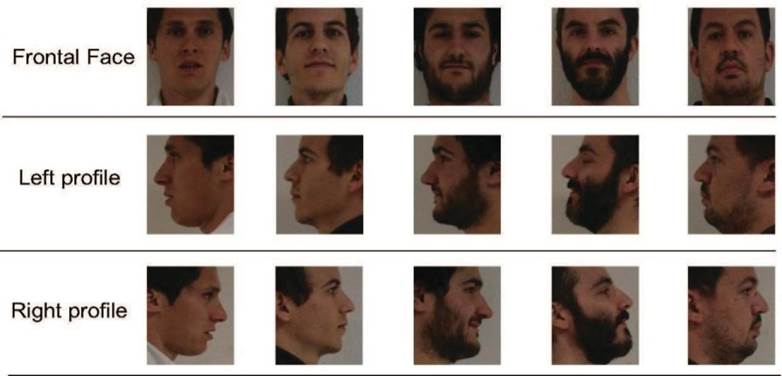

In order to evaluate the proposal, experiments were conducted on two databases. The first was the ICB-RW database [21], one of the few databases that contain both profile and frontal face images of the same subjects. It contains 270 images of 90 subjects, divided into three groups of 90 images: left profile, right profile and frontal. Sample images from different subjects are shown in Figure 5.

The second database was Celebrities in Frontal-Profile in the Wild (CFPW) [22], which contains 5000 frontal images and 2000 profile images of 500 subjects. The evaluation protocol for comparing profile against frontal images is based on 10 splits of the database. Sample images from different subjects are shown in Figure 6.

The importance of face frontalization is shown by comparing face identification performance with frontalized images against that obtained by matching the original profile images. In addition, the proposal is compared with one of the few state-of-the-art frontalization methods that can be applied to profile face images, proposed by Hassner in [4]. For these experiments, a state-of-the-art ResNet-based face recognition method provided in the DLib library [23] was used. The rank-1 recognition rates obtained are shown in Table 1 and Table 2.
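The rank-1 recognition rate reported in the tables can be computed by nearest-neighbour matching of face descriptors. The sketch assumes the descriptors have already been extracted (for example, the 128-dimensional vectors produced by DLib's ResNet face recognition model) and compares them with the Euclidean distance, which is the distance DLib uses for these descriptors; a probe counts as correctly identified when its closest gallery descriptor belongs to the same subject. The function name is hypothetical.

```python
import numpy as np

def rank1_recognition_rate(probe_desc, probe_ids, gallery_desc, gallery_ids):
    """Rank-1 identification rate (%) with Euclidean nearest-neighbour matching.

    probe_desc: (P, D) descriptors of probe images (profile or frontalized)
    gallery_desc: (G, D) descriptors of the frontal gallery
    probe_ids, gallery_ids: subject labels aligned with the descriptor rows
    """
    probe_desc = np.asarray(probe_desc, dtype=float)
    gallery_desc = np.asarray(gallery_desc, dtype=float)
    # Pairwise Euclidean distances, shape (P, G).
    dists = np.linalg.norm(probe_desc[:, None, :] - gallery_desc[None, :, :], axis=-1)
    best = dists.argmin(axis=1)               # closest gallery entry per probe
    predicted = np.asarray(gallery_ids)[best]
    return 100.0 * np.mean(predicted == np.asarray(probe_ids))
```

For instance, with gallery descriptors `[[0, 0], [10, 0]]` labelled `a` and `b`, and probes `[[1, 0], [9, 0], [6, 0]]` labelled `a`, `b`, `a`, the function returns about 66.67, since the third probe lies closer to `b`. Only the probe set changes between the rows of each table; the frontal gallery stays the same.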

Table 1 Recognition results in ICB-RW using the DLib face recognizer

| Type of comparison | Rank-1 recognition rate (%) |
|---|---|
| Profile vs. Front Gallery | 54.11 |
| Hassner frontalization [4] vs. Front Gallery | 85.35 |
| Proposed frontalization vs. Front Gallery | |

Table 2 Recognition results in CFPW using the DLib face recognizer

| Type of comparison | Rank-1 recognition rate (%) |
|---|---|
| Profile vs. Front Gallery | 86.33 |
| Hassner frontalization [4] vs. Front Gallery | 87.01 |
| Proposed frontalization vs. Front Gallery | |

As can be seen from the tables, there is a great improvement in performance when the profile images are frontalized with the proposal. Even with a very good face recognition method, which has obtained more than 99% accuracy on benchmark databases, it is not possible to reliably match profile images against frontal mugshots, and the frontalization method helps by a large margin.

It should be noted that the proposed method is an extension of the efficient method proposed in [9]. The modifications made to the distribution of the landmark points and the use of the initialization regions do not introduce additional processing. Hence, the efficiency of the original method is maintained, while its use is extended to the frontalization of profile face images.

5.- CONCLUSION

In this paper, an efficient method for the frontalization of profile face images was presented. The proposal is based on a modified version of the 3DGEM approach that performs the online stage efficiently. It includes a new profile face landmark detector and modifies the step of filling the missing regions in order to recover the occluded half of the face. The proposal was evaluated on the ICB-RW and CFPW databases, and the results were better than those of an existing state-of-the-art approach. The impact of face frontalization on the performance of face recognition systems was also shown.