Revista Cubana de Ciencias Informáticas

Online version ISSN 2227-1899

Rev cuba cienc informat vol.12 no.3 La Habana Jul.-Sep. 2018

ORIGINAL ARTICLE

A new system for human movement induction based on virtual reality

Lic. José Moráguez Piñol1, Lic. Elías Trenard García2*, Dr.C. Carlos Díaz Novo3

1Facultad de Ciencias Naturales y Exactas, Universidad de Oriente, Santiago de Cuba. Calle 11 # 253, Reparto Vista Alegre, Santiago de Cuba, Cuba. Postal code: 90400. jmoraguez@uo.edu.cu

2Department of Bioinformatics, Centro de Biofísica Médica, Universidad de Oriente, Santiago de Cuba. Bartolome Maso No. 363, Apt. E, between Carnicería and San Félix, Santiago de Cuba, Cuba. Postal code: 90100. eliastrenard@gmail.com

3Department of Bioengineering, Centro de Biofísica Médica, Universidad de Oriente, Santiago de Cuba. Calixto García No. 425, corner of Corona, Santiago de Cuba, Cuba. Postal code: 90100. cdiaznovo@yahoo.es

*Corresponding author: eliastrenard@gmail.com

ABSTRACT

A variety of new technologies for physical exercise and motor rehabilitation are available today, such as serious games, natural user interfaces, and augmented reality. However, their application remains limited compared with traditional methods. This paper therefore presents the design, implementation, and testing of a human movement induction system based on Virtual Reality. The system offers three types of movements: two for the upper limbs and one for the lower limbs. Each movement can be customized to the subject’s characteristics in order to develop biomechanical parameters, such as speed, and skills, such as coordination and balance. The proposed system provides audio-visual feedback, automatic supervision, and result analysis, supporting both service and research. Compared with similar systems, the design introduces three innovations: the combination of Kinect and Google Cardboard, the indirect measurement of motion direction and speed, and the preview of kinematic parameters.

Key words: audio-visual feedback, Google Cardboard, human movement, induction, Kinect, supervision, virtual reality.

INTRODUCTION

Physical activity (PA) is an important intervention to prevent, control, and treat sedentary lifestyles and motor disorders. Traditional PA takes place in gyms, sports facilities, and health care settings, but it can also be achieved by incorporating new technologies. Virtual Reality (VR) technologies are one alternative for inducing human movement, and an attractive way to bring physical exercise to an increasingly technophilic society. Studies report that these technologies can help treat sedentary lifestyles, physical inactivity, and motor disorders in children and older adults (K. O’Loughlin et al., 2012) (Maillot et al., 2012) (Corbetta et al., 2015).

Configuring and controlling VR makes it possible to build interactive contexts for the subject by manipulating biomechanical space-time parameters. Automatic monitoring of these environments makes it possible to quantify and store the subject’s progress and evolution time. The evolution time and the direction and speed of motion are biomechanical descriptors of motor recovery and performance (Peñasco-Martín et al., 2010) (Corbetta et al., 2015) (De Visser et al., 2000).

Several studies show the relative impact of systems such as serious games and interactive game consoles on motor function recovery, and recommend making these systems more flexible (Luque-Moreno et al., 2015) (Pachoulakis et al., 2015). In addition, objective, evidence-based planning is helpful when evaluating performance over time (Ling Chen et al., 2016).

The eBaViR, TOyRA, Removiem, and CuPiD systems have been previously validated (Gil-Gómez et al., 2011) (Gil-Agudo et al., 2012) (Lozano-Quilis et al., 2014) (Rocchi, 2013). Between them, these systems rely on the Kinect sensor, serious games, inertial sensors, and audio-visual feedback. A prototype for shoulder rehabilitation has also been tested using the Oculus Rift DK2 and Intel RealSense (Baldominos et al., 2015). Such solutions have proven to be viable alternatives to traditional exercise, conventional rehabilitation, and robot-assisted therapies (Hidler et al., 2005). Another relevant work is “The gait rehabilitator for treadmill of Julio Diaz Rehabilitation Center” (Pérez Villamil & Zamarreño Hernández, 2016), which guides the patient by projecting footprint images onto the treadmill. However, it provides neither feedback nor automatic supervision during the movement.

VR-based exercises deliver real-time feedback to the subject and appear flexible enough to incorporate expert guidance when evaluating specific interventions (Sveistrup, 2004) (Peñasco-Martín et al., 2010) (Corbetta et al., 2015). Nevertheless, VR exercise is still used insufficiently compared with traditional methods and could be improved to deliver a better service (Pachoulakis et al., 2015). This research aims to design, implement, and test a human movement induction system based on VR, and to support research on its future applications in exercise and rehabilitation.

MATERIALS AND METHODS

The system design was informed by a survey of rehabilitation professionals and experts in the study of human movement. This information was used to design the VR scenes that induce simple motor activities.

Version zero of the system was tested with a group of fourteen healthy subjects (3 females and 11 males) aged 14 to 48 years.

To develop the proposed system, the following software development tools were used: Visual Studio Ultimate 2012, C#, Unity 3D version 5.2 (Free version), Android SDK version 23, Android NDK version 10b, Microsoft Access 2010 version 14.0.4760, .NET Framework version 4.5, GIT version 2.6.3.windows.1, TortoiseGIT version 1.8.16.0, Kinect SDK version 1.8 and iTextSharp library version 5.5.9.0.

The hardware used was: a Kinect for Xbox 360; a Google Cardboard version 2; a wireless dual-band Gigabit router (2.4 GHz); a computer with an Intel Core i5-3340 CPU at 3.10 GHz, 4 GB RAM, a 1 TB hard drive, USB 2.0, and Windows 7 Ultimate (64-bit); a BLU smartphone with Android 4.4.2, a dual-core 1.3 GHz CPU, 512 MB RAM, Wi-Fi 802.11, and accelerometer, light, and proximity sensors; and a Samsung tablet with Android 4.4.2, a quad-core 1.2 GHz CPU, 1.5 GB RAM, 16 GB of storage, Wi-Fi 802.11, and an accelerometer.

System workflow

The main requirements of the proposed system were the ability to configure movements and to supervise them. These requirements defined the system workflow, which consists of the following functionalities: (1) selection and configuration of movements, (2) planning, (3) execution of the session, (4) results analysis, and (5) results export. These functionalities support health care and training services and also allow the system to be used in research.

RESULTS

Defined movement types

Three types of movements were defined according to the type of motion induction, the limbs involved, and the supervision and visualization of kinematic parameters (Table 1). Each movement induces the execution of motor strategies with as small a cognitive component as possible. These activities can develop motion speed and skills such as agility, balance, and coordination.

Movement induction is a sequence of changes provoked by elements of the VR scene. Each change is an event, previously configured with kinematic parameters (Configuration categories of Table 1). In the “Objects Evasion Movement”, the event consists of moving an object towards the subject (Fig. 1.c). In the other two movements, the event consists of lighting up a sphere (Fig. 1.a, 1.b). The configuration of all the events determines the movement’s complexity and the required motion speed, and it is used to supervise the subject.
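The event model described above can be sketched as a small data structure. This is a minimal Python illustration, not the authors' C#/Unity implementation; the field names (`target`, `onset_s`, `window_s`) and the mean-interval helper are hypothetical stand-ins for the configuration categories of Table 1, which are not reproduced here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class MovementType(Enum):
    # The three movement types described in the text; identifiers are illustrative.
    TABLE = "table"                    # upper limbs, lit spheres on a table
    FRONTAL_PANEL = "frontal_panel"    # upper limbs, lit spheres on a frontal panel
    OBJECT_EVASION = "object_evasion"  # lower limbs, objects moving towards the subject


@dataclass(frozen=True)
class InductionEvent:
    """One change in the VR scene (hypothetical parameter names)."""
    target: int       # sphere index, or object direction number for evasion
    onset_s: float    # when the sphere lights up or the object is launched
    window_s: float   # time the subject has to respond


@dataclass
class EventSequence:
    movement: MovementType
    events: List[InductionEvent] = field(default_factory=list)

    def add(self, event: InductionEvent) -> None:
        # Keep events in temporal order so the sequence can be replayed and supervised.
        if self.events and event.onset_s < self.events[-1].onset_s:
            raise ValueError("events must be added in increasing onset order")
        self.events.append(event)

    def mean_interval_s(self) -> float:
        """Average time between consecutive onsets: a crude proxy for demanded speed."""
        if len(self.events) < 2:
            return float("inf")
        onsets = [e.onset_s for e in self.events]
        gaps = [b - a for a, b in zip(onsets, onsets[1:])]
        return sum(gaps) / len(gaps)
```

For example, three table events with onsets at 0, 2, and 4 s give a mean interval of 2 s; shortening the interval raises the motion speed the sequence demands.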

Movement supervision is based on successes and failures. Each event produces a success when the subject achieves the movement objective. In the “Objects Evasion Movement”, a success occurs when the subject evades the object and returns to the initial position. In the other two movements (Table and Frontal Panel), a success occurs when the subject touches the lit sphere with his or her hand and returns that hand to the initial position. Any other behavior is interpreted as a failure.
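The success rule for the sphere-based movements can be expressed as a small scan over per-frame observations. This is a hedged sketch, assuming the supervisor receives boolean flags per frame (target touched, another sphere touched, hand at the initial position) and a response window per event; the `Frame` type and `classify_event` function are hypothetical. For the evasion movement, "object avoided" would take the place of "target touched".

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Frame:
    t: float              # seconds since the event onset
    touched_target: bool  # hand collides with the lit sphere
    touched_other: bool   # hand collides with any other sphere
    at_initial: bool      # hand is back at its initial position


def classify_event(frames: Iterable[Frame], window_s: float) -> bool:
    """Success (True) only if, within window_s, the hand touches the lit sphere
    and then returns to the initial position; anything else is a failure."""
    touched = False
    for f in frames:
        if f.t > window_s:
            break              # time ran out before the movement was completed
        if f.touched_other:
            return False       # touching a wrong sphere counts as a failure
        if f.touched_target:
            touched = True
        elif touched and f.at_initial:
            return True        # target reached, then hand returned
    return False
```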

The user configures an event sequence for the subject and defines the number of times the subject must execute it (Luque-Moreno et al., 2015). Each execution is a session, consisting of three stages in the following order: Warm-up, Work, and Relaxation. The Warm-up and Relaxation stages prepare the subject for the exercise and bring him or her back to rest, while supervision is performed in the Work stage. For this reason, the greatest complexity and demand must be placed in the Work stage. The result of a session is a sequence of successes and failures corresponding to the sequence of pre-configured events.
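The session structure above can be sketched as follows, assuming outcomes are recorded per stage as booleans and that only the Work stage is kept as the supervised result; the `Session` class and `success_rate` helper are hypothetical names, not part of the described system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Stage(Enum):
    # Enum members iterate in definition order, matching the session's stage order.
    WARM_UP = "Warm-up"
    WORK = "Work"
    RELAXATION = "Relaxation"


@dataclass
class Session:
    number: int
    outcomes: Dict[Stage, List[bool]]  # True = success, False = failure, per event

    def supervised_result(self, work_events: int) -> List[bool]:
        """Return the Work-stage outcomes, which must line up one-to-one
        with the pre-configured Work events."""
        result = list(self.outcomes.get(Stage.WORK, []))
        if len(result) != work_events:
            raise ValueError(
                f"session {self.number}: {len(result)} outcomes for {work_events} events")
        return result


def success_rate(result: List[bool]) -> float:
    """Fraction of supervised events that were successes."""
    return sum(result) / len(result) if result else 0.0
```

Warm-up and Relaxation outcomes can still be stored; they simply do not enter the supervised result.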

Motion is induced by projecting the VR scene in a visual immersion helmet (Fig. 1). The subject is represented by an avatar, and the subject’s interaction with the scene is supervised through collision detection. The avatar is animated in real time with the skeleton coordinates acquired by the Kinect sensor, which are transmitted to the helmet. The avatar’s limbs therefore have the same degrees of freedom as the Kinect skeleton limbs, giving the subject direct visual feedback of his or her own movements during the exercise.
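In the described system, collisions are presumably handled inside the Unity scene. As an engine-independent illustration, the check reduces to a sphere-sphere overlap test between the tracked hand joint and the lit sphere, plus a distance check for the return to the initial position. Coordinates are assumed to be in metres in the Kinect skeleton frame; the hand radius and rest tolerance are hypothetical values.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def hand_touches_sphere(hand: Vec3, sphere_center: Vec3,
                        sphere_radius: float, hand_radius: float = 0.05) -> bool:
    """Overlap test treating the hand as a small sphere (hand_radius is assumed)."""
    return math.dist(hand, sphere_center) <= sphere_radius + hand_radius


def hand_at_rest(hand: Vec3, rest: Vec3, tolerance: float = 0.1) -> bool:
    """True when the hand is within `tolerance` metres of its initial position."""
    return math.dist(hand, rest) <= tolerance
```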

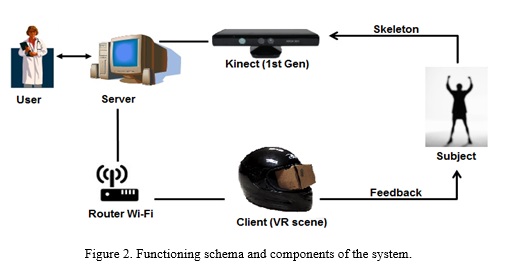

The subject must be able to execute the movements without any cables, within an area 3 m wide and no more than 3.5 m from the Kinect sensor in normal mode (Catuhe, 2012) (Giorgio & Fascinari, 2013). Information must also be exchanged between an Android mobile device and a computer running Windows. This information includes the movement settings, VR scene control, the supervision results, and a skeleton of 20 joint coordinates acquired by the Kinect sensor at 30 frames per second (Jana, 2012). These requirements make a wireless network the natural choice for communication during movement execution.

Real-time acquisition of human movement, configuration, data storage, and supervision are tasks that can be distributed. For these reasons, the system is based on a client-server architecture. The Server application runs on the computer, and the Client application runs on a mobile device attached to a visual immersion helmet (Fig. 2). The client-server architecture has additional benefits: it made it possible to use the computing power of the mobile device and to use a first-generation Kinect, which has no official SDK for the Unity game engine (Pedraza-Hueso et al., 2015).
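The paper does not specify the wire format used between Server and Client. As a hedged sketch, one skeleton frame can be packed as a frame counter plus 20 (x, y, z) coordinates, which also gives a quick estimate of the bandwidth the skeleton stream needs. The layout (little-endian `uint32` counter, `float32` coordinates) is an assumption made for illustration.

```python
import struct
from typing import List, Tuple

JOINTS = 20  # Kinect v1 skeleton joints (from the text)
FPS = 30     # skeleton frame rate (from the text)
# Assumed layout: little-endian uint32 frame counter + 20 (x, y, z) float32 triples.
FRAME_FORMAT = f"<I{3 * JOINTS}f"
FRAME_SIZE = struct.calcsize(FRAME_FORMAT)  # 4 + 240 = 244 bytes

Joint = Tuple[float, float, float]


def pack_frame(counter: int, joints: List[Joint]) -> bytes:
    """Serialize one skeleton frame into the assumed binary layout."""
    if len(joints) != JOINTS:
        raise ValueError(f"expected {JOINTS} joints, got {len(joints)}")
    flat = [c for joint in joints for c in joint]
    return struct.pack(FRAME_FORMAT, counter, *flat)


def unpack_frame(payload: bytes) -> Tuple[int, List[Joint]]:
    """Inverse of pack_frame (coordinates come back as float32-rounded values)."""
    values = struct.unpack(FRAME_FORMAT, payload)
    counter, flat = values[0], values[1:]
    joints = [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]
    return counter, joints


def skeleton_bitrate_kbps() -> float:
    """Payload bit rate of the skeleton stream, ignoring transport overhead."""
    return FRAME_SIZE * FPS * 8 / 1000
```

Under these assumptions each frame is 244 bytes, so at 30 frames per second the skeleton stream needs about 58.6 kbit/s before protocol overhead, a small load for an 802.11 network.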

First-person audio-visual feedback is achieved by combining Kinect and Google Cardboard (GC) technologies. The VR scenes that induce the movements run on a 7-inch tablet or a smartphone placed in the GC, and these components are attached to the helmet (Fig. 2). GC provides visual immersion and freedom of movement because it holds a mobile device that offers Wi-Fi connectivity, its own power supply, and audio output. Since the VR scene runs on the helmet, network traffic is limited to the Kinect skeleton coordinates and the scene supervision and control data.

The system software consists of two applications, Client and Server, each designed as a set of modules. The Server application contains the Kinect Management, Configuration, Evolution Analysis, and Database Management modules. The Client application contains the Wi-Fi Connection, VR Scenes, and Supervision modules.

Implemented System

The system stores its information in a single-file Microsoft Access database. Each user creates his or her own database and executes the system workflow on it. Because the data are kept in Microsoft Access files, the user can work on any computer where the system is installed. Two types of users are defined: “Administrator” and “Standard”. Each database has a single Administrator, who creates the database and manages the Standard users. This provides a security layer that protects the stored data.
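The paper does not describe how these roles are enforced in the Access database. The rules themselves (one Administrator per database, who alone manages Standard users) can be sketched in plain Python as below; `UserRegistry` and its methods are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class UserRegistry:
    """Per-database user model: exactly one Administrator (the database
    creator), who alone can add or remove Standard users."""
    administrator: str
    standard_users: Set[str] = field(default_factory=set)

    def _require_admin(self, actor: str) -> None:
        if actor != self.administrator:
            raise PermissionError(f"{actor!r} is not the Administrator")

    def add_standard(self, actor: str, user: str) -> None:
        self._require_admin(actor)
        if user == self.administrator:
            raise ValueError("the Administrator cannot also be a Standard user")
        self.standard_users.add(user)

    def remove_standard(self, actor: str, user: str) -> None:
        self._require_admin(actor)
        self.standard_users.discard(user)

    def can_access(self, user: str) -> bool:
        return user == self.administrator or user in self.standard_users
```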

The use cases of the system cover the workflow and database management. These functionalities are executed from the main interface of the Server application (Fig. 3).

The three configuration interfaces allow a detailed description of the movement sequence, the definition of its complexity, and the number of sessions. The central panel of each interface is specialized according to the kinematic parameters of the event (Fig. 4). With these interfaces, the user can customize the movements for the subject (Luque-Moreno et al., 2015) (Pachoulakis et al., 2015).

Each configuration interface includes a preview feature that reproduces the behavior of the kinematic parameters of every configured event. This visualization lets the user judge whether the configuration is suitable, preventing physical overload of the subject.
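One way to make the preview's purpose concrete is to compute, for each configured reach event, the average hand speed it demands, v = 2d / T, where d is the distance from the rest position to the sphere and T is the time allowed to touch it and return. This is an illustrative calculation under two assumptions not taken from the paper: the hand travels in a straight line out and back, and `max_speed` is a per-subject limit chosen by the user.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ReachEvent:
    sphere: Vec3     # sphere position in metres (hypothetical parameter)
    window_s: float  # time allowed to touch the sphere and return


def required_speeds(rest: Vec3, events: List[ReachEvent]) -> List[float]:
    """Average hand speed (m/s) each event demands: out-and-back path / window."""
    return [2 * math.dist(rest, e.sphere) / e.window_s for e in events]


def overloaded_events(rest: Vec3, events: List[ReachEvent], max_speed: float) -> List[int]:
    """Indices of events whose demanded speed exceeds the subject's limit."""
    return [i for i, v in enumerate(required_speeds(rest, events)) if v > max_speed]
```

A sphere 0.4 m away with a 0.5 s window demands 1.6 m/s, so with a 1.0 m/s limit that event would be flagged for reconfiguration.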

Three Evolution Analysis interfaces are likewise specialized for the kinematic parameters of the movement induction events. These interfaces can display either successes or failures, and include a time graph that shows the executed and planned sessions. Each interface allows the user to define the interval of sessions to analyze, and it is also possible to view the results of a specific session. Together, these functionalities allow the subject’s performance to be analyzed on the basis of indirect measurements of movement direction and speed.
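The data behind such a time graph can be sketched as a per-session count over a chosen interval. This assumes results are stored as a mapping from executed session number to its Work-stage outcomes; planned sessions without results are returned with `None`. The function name and storage layout are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def evolution_series(results: Dict[int, List[bool]], first: int, last: int,
                     show: str = "success") -> List[Tuple[int, Optional[int]]]:
    """Per-session count of successes or failures for sessions first..last.

    Planned sessions that have not been executed yet are returned with None,
    so a time graph can show executed and planned sessions together.
    """
    if show not in ("success", "failure"):
        raise ValueError("show must be 'success' or 'failure'")
    wanted = show == "success"
    series: List[Tuple[int, Optional[int]]] = []
    for n in range(first, last + 1):
        if n in results:
            series.append((n, sum(1 for r in results[n] if r == wanted)))
        else:
            series.append((n, None))
    return series
```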

The export feature generates documentation containing the current Evolution Analysis and result graphs. PDF export generates a report that includes the subject data, the planning information, and the result graphs; this document is useful for doctors, trainers, and biomechanics experts. CSV export is intended for researchers: it creates a directory containing a CSV file for each graph and a TXT file with information about the subject and the planning.
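The CSV export layout described above (one CSV file per graph plus a TXT file with subject and planning information) can be sketched with Python's standard `csv` and `pathlib` modules. File names such as `info.txt` and the graph representation are assumptions; the system itself is written in C#.

```python
import csv
import tempfile
from pathlib import Path
from typing import Dict, List, Sequence, Tuple

# A graph is exported as (column names, rows). File names are hypothetical.
Graph = Tuple[List[str], List[Sequence[object]]]


def export_for_research(directory: Path, graphs: Dict[str, Graph],
                        subject_and_plan: Dict[str, str]) -> List[Path]:
    """Create `directory` with one CSV file per graph and an info.txt file."""
    directory.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for name, (columns, rows) in graphs.items():
        path = directory / f"{name}.csv"
        # newline="" lets the csv module control line endings itself.
        with path.open("w", newline="", encoding="utf-8") as fh:
            writer = csv.writer(fh)
            writer.writerow(columns)
            writer.writerows(rows)
        written.append(path)
    info = directory / "info.txt"
    info.write_text("".join(f"{key}: {value}\n" for key, value in subject_and_plan.items()),
                    encoding="utf-8")
    written.append(info)
    return written


if __name__ == "__main__":
    # Invented example data, for illustration only.
    with tempfile.TemporaryDirectory() as tmp:
        files = export_for_research(
            Path(tmp) / "export",
            {"evasion_successes": (["session", "successes"], [[1, 2], [2, 3]])},
            {"subject": "S01", "planned_sessions": "10"},
        )
        for f in files:
            print(f.name)
```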

Test with healthy subjects

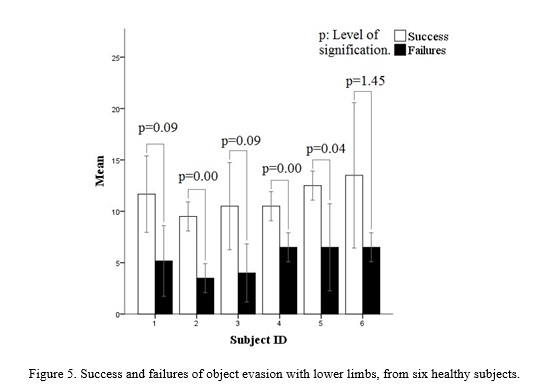

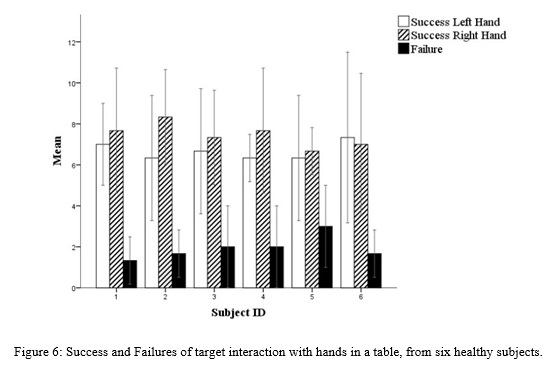

Version zero of the system was tested with fourteen healthy subjects. The test results were exported to CSV format with the feature described above. The following graphs were generated with a statistical tool and show results for object evasion with the lower limbs (Fig. 5) and for two-handed interaction with the table for the upper limbs (Fig. 6). As expected for healthy subjects, successes predominate.

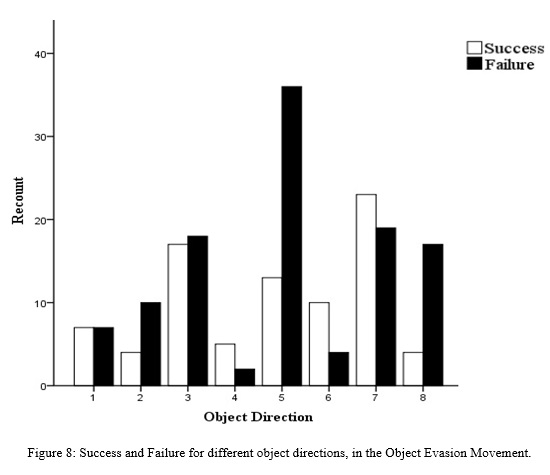

The results also show that movement complexity determines performance. Fig. 7 shows that, in the Object Evasion movement, success increases with the Distance Between Objects: a short distance between objects demands a higher movement speed than a long one. Likewise, Fig. 8 shows more failures for Object Direction number five (center of the body, Fig. 6.c). An object heading towards the center of the body has to be dodged with larger steps, demanding more effort from the subject. Success and failure counts can therefore serve as indirect measurements of movement speed and direction.
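The indirect measurement in this paragraph amounts to grouping event outcomes by one configuration parameter (distance between objects, or object direction number) and comparing success rates across its values. A minimal sketch follows, assuming outcomes are available as (parameter value, success) pairs; the data in the test are invented for illustration and are not the study's results.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def success_rate_by(outcomes: Iterable[Tuple[float, bool]]) -> Dict[float, float]:
    """Success rate for each value of one configuration parameter.

    outcomes holds (parameter value, success) pairs pooled over sessions,
    e.g. (distance between objects, True) or (object direction number, False).
    The result is ordered by parameter value.
    """
    counts: Dict[float, List[int]] = defaultdict(lambda: [0, 0])  # value -> [successes, events]
    for value, success in outcomes:
        counts[value][0] += int(success)
        counts[value][1] += 1
    return {value: hits / total for value, (hits, total) in sorted(counts.items())}
```

A rate that rises with distance between objects, or drops for one direction, is the kind of pattern that Fig. 7 and Fig. 8 display.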

DISCUSSION

Similar systems share several features with the proposed one: use of VR, the Kinect sensor, audio-visual feedback, avatars, control of kinematic parameters, and low-cost hardware (Gil-Gómez et al., 2011) (Gil-Agudo et al., 2012) (Lozano-Quilis et al., 2014) (Pérez Villamil & Zamarreño Hernández, 2016). They also share configuration, planning, and reporting features. The minimum requirements of the presented system include common, accessible hardware such as the Kinect sensor, a mobile device, a Wi-Fi router, and a GC. These requirements, together with automatic supervision, allow the system to be adapted to the subject’s home, an important similarity with in-home systems such as CuPiD and ReaKinG (Rocchi, 2013) (Pedraza-Hueso et al., 2015).

Some of the systems mentioned differ from the proposed one in measurement precision, in the other technologies they use, and in visual immersion. TOyRA (Gil-Agudo et al., 2012) and CuPiD (Rocchi, 2013) perform more precise kinematic measurements using wearable devices and inertial sensors. These measurements quantify the range of movement (ROM) of the shoulder, elbow, and wrist (Gil-Agudo et al., 2012), and are also used to animate the avatars (Gil-Agudo et al., 2012) (Rocchi, 2013). However, the Kinect sensor is intuitive to use and requires no complex calibration or additional equipment. The proposed Kinect-based system measures motion direction and speed indirectly, from successes and failures. These parameters can be evaluated with the result analysis features of the system to determine the optimum frequency and intensity of exercise (Ling Chen et al., 2016), giving the user evidence for adjusting the subject’s movements through the configuration features.

The systems mentioned above use screens to achieve immersion in VR or Augmented Reality (AR) (Lozano-Quilis et al., 2014). This is third-person visual immersion, which requires additional familiarization and may be susceptible to distractions. The current system proposes first-person visual immersion with a VR viewer, and the helmet protects the subject in case of a fall (Pachoulakis et al., 2015). The proposed immersion is intuitive, more motivating, and reduces distractions during movement execution. Another system rehabilitates one shoulder with a therapy based on the Oculus Rift DK2 and the Intel RealSense motion sensor, providing feedback through a one-hand avatar (Baldominos et al., 2015). In comparison, the presented system offers similar immersion through the viewer, richer avatar feedback, and movement induction for both upper and lower limbs, with support for service and research.

CONCLUSIONS

A system for human movement induction based on virtual reality was designed, implemented, and tested. Its main design innovation is the combination of Kinect and Google Cardboard. The successful test showed that the system can provide evidence and perform indirect measurements of movement direction and speed. This evidence will enable quantitative studies to evaluate the future application of the system to exercise and rehabilitation.

ACKNOWLEDGEMENTS

Special thanks to the Human Movement Analysis Laboratory of the Medical Biophysics Center and the Neurophysiology Department of Santiago de Cuba General Hospital.

REFERENCES

Baldominos, A., Saez, Y., & García del Pozo, C. An approach to physical rehabilitation using state-of-the-art virtual reality and motion tracking technologies. Procedia Computer Science, 2015, (64), 10-16.

Catuhe, D. A bit of background. Programming with the Kinect for Windows Software Development Kit. Redmond, Washington: Microsoft Press, 2012. p. 5.

Corbetta, D., Imeri, F., & Gatti, R. Rehabilitation that incorporates virtual reality is more effective than standard rehabilitation for improving walking speed, balance and mobility after stroke: a systematic review. Journal of Physiotherapy, 2015, (61), 117-124. [Consulted on September 16, 2016]. Available at: http://www.sciencedirect.com/science/article/pii/S1836955315000569

De Visser, E., Mulder, T., Schreuder, H., Veth, R., & Duysens, J. Gait and electromyographic analysis of patients recovering after limb-saving surgery. Clinical Biomechanics, 2000, (15), 592-599.

Gil-Agudo, A., Dimbwadyo-Terrer, I., Peñasco-Martín, B., de los Reyes-Guzmán, A., Bernal-Sahún, A., & Berbel-García, A. Experiencia clínica de la aplicación del sistema de realidad TOyRA en la neuro-rehabilitación de pacientes con lesión medular. Rehabilitación, 2012, 46(1), 41-48. [Consulted on September 5, 2016]. Available at: http://www.elsevier.es/es-revista-rehabilitacion-120-articulo-experiencia-clinica-aplicacion-del-sistema-S0048712011001629?redirectNew=true

Gil-Gómez, J. A., Lloréns, R., Alcañiz, M., & Colomer, C. Effectiveness of a Wii balance board-based system (eBaViR) for balance rehabilitation: a pilot randomized clinical trial in patients with acquired brain injury. [Online] BioMed Central, 2011. [Consulted on September 16, 2016]. Available at: https://jneuroengrehab.biomedcentral.com/articles/10.1186/1743-0003-8-30

Giorgio, C., & Fascinari, M. Hardware Overview. Kinect in Motion – Audio and Visual Tracking by Example. Birmingham-Mumbai: Packt Publishing, 2013. p. 11.

Hidler, J., Nichols, D., Pelliccio, M., & Brady, K. Advances in the Understanding and Treatment of Stroke Impairment Using Robotic Devices. Top Stroke Rehabil, 2005, 12(2), 22-35.

Jana, A. Human Skeleton Tracking. Kinect for Windows SDK Programming Guide. Birmingham-Mumbai: Editorial, 2012. p. 158-211.

K. O’Loughlin, E., N. Dugas, E., M. Sabiston, C., & L. O’Loughlin, J. Prevalence and Correlates of Exergaming in Youth. PEDIATRICS, 2012, 130(5). doi:10.1542/peds.2012-0391

Ling Chen, Wai Leung Ambrose Lo, Yu Rong Mao, Ming Hui Ding, Qiang Lin, Hai Li, . . . Dong Feng Huang. Effect of virtual reality on postural and balance control in patients with stroke: a systematic literature review. [Online] BioMed Research International, 2016. [Consulted on December 5, 2016]. Available at: http://downloads.hindawi.com/journals/bmri/aip/7309272.pdf

Lozano-Quilis, J.-A., Gil-Gómez, H., Gil-Gómez, J.-A., Albiol-Pérez, S., Palacios-Navarro, G., M Fardoun, H., & Mashat, S. A. Virtual Rehabilitation for Multiple Sclerosis Using a Kinect-Based System: Randomized Controlled Trial. [Online] PubMed Central®, 2014. [Consulted on September 5, 2016]. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4307818/

Luque-Moreno, C., Ferragut-Garcías, A., Rodríguez-Blanco, C., Heredia-Rizo, A. M., Oliva-Pascual-Vaca, J., Kiper, P., & Oliva-Pascual-Vaca, Á. A Decade of Progress Using Virtual Reality for Poststroke Lower Extremity Rehabilitation: Systematic Review of the Intervention Methods. [Online] BioMed Research International, 2015. [Consulted on August 31, 2017]. Available at: http://dx.doi.org/10.1155/2015/342529

Maillot, P., Perrot, A., & Hartley, A. Effects of interactive physical-activity video-game training on physical and cognitive function in older adults. Psychology and Aging, American Psychological Association, 2012, 27(3), 589-600. [Consulted on August 31, 2017]. Available at: https://hal.archives-ouvertes.fr/hal-00736985

Pachoulakis, I., Papadopoulos, N., & Spanaki, C. Parkinson’s Disease Patient Rehabilitation Using Gaming Platforms: Lessons Learnt. International Journal of Biomedical Engineering and Science (IJBES), 2015, 2(4).

Pedraza-Hueso, M., Martín-Calzón, S., Díaz-Pernas, F., & Martínez-Zarzuela, M. Rehabilitation using Kinect-based Games and Virtual Reality. Procedia Computer Science, 2015, 75, 161-168.

Peñasco-Martín, B., De los Reyes-Guzmán, A., Gil-Agudo, A., Bernal-Sahún, A., Pérez-Aguilar, B., & De la Peña-González, A. Aplicación de la realidad virtual en los aspectos motores de la neurorrehabilitación. Rev Neurol, 2010, (51), 481-8.

Pérez Villamil, A., & Zamarreño Hernández, J. Rehabilitador de Marcha para cinta rodante del Centro Nacional de Rehabilitación Julio Díaz. Serie Científica de la Universidad de las Ciencias Informáticas, 2016, 9(3), 135-148.

Rocchi, L. (October 22, 2013). Joinup. [Consulted on August 29, 2016]. Available at: https://joinup.ec.europa.eu/community/epractice/case/cupid-closed-loop-system-personalised-and-home-rehabilitation-people-parkin

Sveistrup, H. Motor rehabilitation using virtual reality. J Neuroeng Rehabil, 2004, 1:10.

Received: 27/10/2017

Accepted: 06/07/2018