Introduction

Skin cancer is the most common human cancer, and its incidence has been increasing steadily for several decades. Exposure to UV light is the leading cause of skin cancer (Sun et al., 2020). In recent years, skin cancer incidence has risen worryingly worldwide. The World Health Organization (WHO) estimates that two to three million non-melanoma skin cancers and approximately 132,000 malignant melanomas occur globally each year. Cancer is one of the leading causes of death in humans, and according to WHO statistics, cancer is predicted to become the leading cause of death by 2030, with 13.1 million deaths. Among all types of cancer, skin cancer is the most common in the United States, and projections suggest that 20% of Americans will develop skin cancer in their lifetime (Emre et al., 2007; Kenet et al., 1994).

The human body is made up of living cells that grow, divide into new cells and die. Cell division is a continuous process in the human body that replaces dead cells. However, abnormal cell growth and uncontrolled cell division cause cancer.

The three main types of skin cancer are basal cell carcinoma (BCC), squamous cell carcinoma (SCC) and melanoma. BCC and SCC are also called non-melanoma skin cancers or keratinocyte cancers. Rare types of non-melanoma skin cancer, such as Merkel cell carcinoma and angiosarcoma, are treated differently from BCC and SCC. BCC starts in the basal cells of the epidermis and accounts for about 70% of non-melanoma skin cancers. It grows slowly over months or years and rarely spreads to other parts of the body. The earlier a BCC is diagnosed, the easier it is to treat; if left untreated, it can grow deeper into the skin and damage nearby tissues, making treatment more difficult. Having one BCC increases the risk of developing another, and a person can have more than one BCC at the same time on different parts of the body.

Melanoma is the most lethal type of skin cancer (Adegun et al., 2020). It starts in the melanocytes, the pigment-producing cells of the skin, and accounts for 1 to 2% of all skin cancers. Although melanoma is less common, it is considered the most serious type because it grows quickly and is the most likely to spread to other parts of the body, such as the lymph nodes, lungs, liver, brain and bones, especially if it is not found early. The earlier melanoma is detected, the more likely it is to be treated successfully. Melanoma is one of the deadliest and fastest-growing types of cancer in the world.

This work is related to our previous work on machine learning and big data (Naoui et al., 2020), in which we proposed a multilayer architecture for integrating machine learning with big data systems.

Skin cancer detection has attracted the attention of many researchers:

Ichim et al. (2020) proposed a melanoma detection system based on multiple connected neural networks. The system has two hierarchical levels, subjective and objective: the subjective level extracts features of the lesions, and the objective level learns from the subjective level with a back-propagation perceptron and makes the final decision (melanoma or not melanoma). Pollastri et al. (2020) proposed augmenting skin lesion data with generative adversarial networks (GANs), generating both skin lesion images and their segmentation masks; the approach was tested with two GAN algorithms, a convolutional GAN and a Laplacian GAN. Mazoure et al. (2022) presented DUNEScan, a web server architecture for deep skin cancer uncertainty analysis based on convolutional neural networks. Fu et al. (2022) presented a four-stage method for melanoma diagnosis: the images are first preprocessed; the region of interest is then segmented with the kernel fuzzy C-means method; the main image features are then optimally extracted; finally, a multilayer perceptron classifies the skin cancer. Reis et al. (2020) and Agrahari et al. (2022) proposed deep convolutional neural networks for skin cancer detection, and Reis et al. (2020) also applied deep learning to segmentation. Lakshminarayanan et al. (2022) compared convolutional neural networks, AdaBoost, gradient boosting and decision tree algorithms for skin cancer detection. Kadampur et al. (2020) presented a tool with which non-programmers can develop complex deep learning models; by exposing general procedures and loop patterns in deep learning model development, it made the design of deep learning classifiers more flexible. Although dermoscopy improves the visual perception of a skin lesion, automatic recognition of melanoma from dermoscopic images remains difficult for several reasons.
First, the low contrast between skin lesions and normal skin makes it difficult to segment lesion areas accurately. Second, melanoma lesions and normal skin may be visually very similar, which makes it difficult to distinguish one from the other. Third, variations in patients' skin conditions, e.g., skin color, natural hair or veins, change the appearance of melanoma in terms of color, texture, etc. Early detection is important: the survival rate can reach 98% for patients diagnosed early, compared with 17% for those diagnosed at later stages (Siegel et al., 2018). Methods that enable early detection of skin cancer are therefore vital for patients' lives.

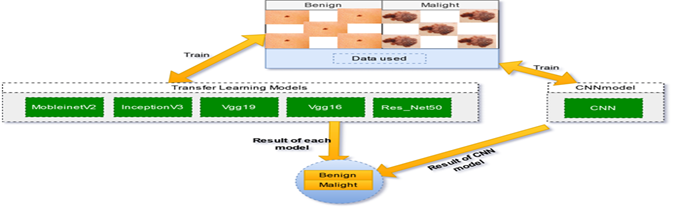

The rest of this paper is organized as follows. The Materials and Methods section presents our architecture and the models used: the transfer learning models MobileNetV2, InceptionV3, VGG19, VGG16 and ResNet50, and a CNN model. We compare the models by their accuracy. The Results and Discussion section presents and discusses our results, and the Conclusion closes the paper.

Materials and Methods

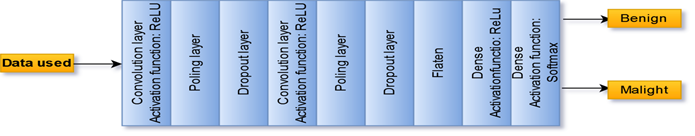

We propose an architecture composed of five transfer learning models and a CNN model. The transfer learning models are MobileNetV2, InceptionV3, VGG19, VGG16 and ResNet50. We compute the accuracy of each model. The data have two classes: benign and malignant (Figure 4). We developed the models in Python using the scikit-learn (sklearn), Keras and TensorFlow libraries. We used Google Colab, a cloud environment from Google for the data science community, which allowed us to build complex and heavy machine learning and deep learning models without being limited by local machine resources (Bisong, 2019).

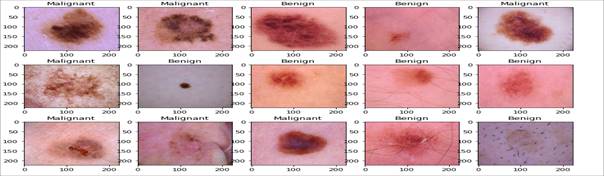

Data used

The data consist of skin cancer images in two classes, benign and malignant. The dataset contains 2,637 images (164 MB in total) of size 224 × 224, organized in two folders.
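As a rough back-of-the-envelope check (our own arithmetic, not part of the original experiments), the sketch below estimates how much memory the decoded dataset occupies at the 224 × 224 × 3 input shape of Table 2, and how many batches of 64 one pass over all 2,637 images takes. The batch count is an upper bound, because the train/validation split is not reported here.

```python
import math

N_IMAGES = 2637                        # images in the dataset
HEIGHT, WIDTH, CHANNELS = 224, 224, 3  # input shape from Table 2
BATCH_SIZE = 64                        # batch size from Table 2

# One byte per pixel value once an image is decoded to uint8.
bytes_per_image_uint8 = HEIGHT * WIDTH * CHANNELS
# Four bytes per value after conversion to float32 for the network.
bytes_per_image_float32 = 4 * bytes_per_image_uint8

total_uint8_mib = N_IMAGES * bytes_per_image_uint8 / 2**20
total_float32_mib = N_IMAGES * bytes_per_image_float32 / 2**20

# Batches needed to visit every image once; the last batch is partial.
batches_per_pass = math.ceil(N_IMAGES / BATCH_SIZE)
last_batch_size = N_IMAGES - (batches_per_pass - 1) * BATCH_SIZE

print(f"uint8:   {total_uint8_mib:.1f} MiB")    # 378.6 MiB
print(f"float32: {total_float32_mib:.1f} MiB")  # 1514.2 MiB
print(f"{batches_per_pass} batches per pass, last one has {last_batch_size} images")
```

Decoded, the images take roughly 379 MiB as uint8 and about 1.5 GiB as float32, several times the 164 MB stored on disk, which is one reason image pipelines usually decode and feed images batch by batch instead of holding everything in memory.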

The table shows the data contents:

Transfer learning models

Transfer learning (TL) is a machine learning (ML) research problem that focuses on storing the knowledge gained while solving one problem and applying it to a different but related problem. Transfer learning is one of the most common techniques in computer vision and deep learning for transferring knowledge from one domain to another. It allows users to reuse pre-trained weights from another domain when computational power is limited (West et al., 2007). Creating algorithms that facilitate transfer learning has become a goal for machine learning practitioners, who strive to make machine learning as close as possible to human learning. Machine learning algorithms are typically designed to handle isolated tasks; transfer learning develops methods for transferring knowledge from one or more of these source tasks to improve learning on a related target task. The goal of these transfer strategies is to make machine learning as efficient as human learning (Basque et al., 2004). Various pre-trained deep transfer learning models are used for image classification, keypoint detection, segmentation and object detection (Showkat et al., 2022; Ayana et al., 2022).
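To make the idea concrete, the toy sketch below (NumPy only; all names are hypothetical) mimics transfer learning: a feature extractor whose weights are treated as already learned on a source task is kept frozen, and only a small logistic-regression head is trained on a new target task. The random projection standing in for pre-trained weights and the synthetic two-cluster data are assumptions made purely for illustration; in practice the frozen part would be an ImageNet-trained network such as those described below.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a backbone pre-trained on a source task. Real weights would
# come from e.g. ImageNet training; here they are random and never updated.
W_FROZEN = rng.normal(size=(2, 16))


def extract_features(x):
    """Frozen feature extractor: a fixed projection followed by ReLU."""
    return np.maximum(x @ W_FROZEN, 0.0)


def train_head(feats, labels, lr=0.1, epochs=500):
    """Fit only a logistic-regression head on top of the frozen features."""
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))  # sigmoid probabilities
        grad = p - labels  # gradient of binary cross-entropy w.r.t. the logit
        w -= lr * feats.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b


def predict(x, w, b):
    """Class 1 when the head's logit is positive."""
    return (extract_features(x) @ w + b > 0).astype(int)


# Toy target task: two well-separated 2-D clusters labelled 0 and 1.
x0 = rng.normal(loc=-2.0, scale=0.5, size=(100, 2))
x1 = rng.normal(loc=2.0, scale=0.5, size=(100, 2))
X = np.vstack([x0, x1])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, b = train_head(extract_features(X), y)
accuracy = (predict(X, w, b) == y).mean()
print(f"target-task accuracy: {accuracy:.2f}")
```

Only the 16 head weights and the bias are learned; the extractor is reused unchanged, which is what makes transfer learning cheap when the target dataset or compute budget is small.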

MobileNetV2 is a convolutional neural network architecture designed to perform well on mobile devices. It is based on an inverted residual structure in which the residual connections are between the bottleneck layers. The intermediate expansion layer uses lightweight depthwise convolutions to filter features as a source of non-linearity. Overall, the MobileNetV2 architecture contains an initial fully convolutional layer with 32 filters, followed by 19 residual bottleneck layers (Sandler et al., 2018; Falconí et al., 2019).
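Why depthwise convolutions count as "lightweight" can be seen by counting weights. The sketch below is our own illustration and ignores biases and batch-normalization parameters. It counts the weights of one inverted residual bottleneck: a 1 × 1 expansion by a factor t (6 is the default in the MobileNetV2 paper), a 3 × 3 depthwise convolution, and a 1 × 1 linear projection back to a narrow bottleneck. The residual connection is used only when the stride is 1 and the input and output widths match.

```python
def inverted_residual_weights(c_in, c_out, expansion=6, kernel=3):
    """Weights of one MobileNetV2 bottleneck (no biases, no BatchNorm)."""
    hidden = c_in * expansion
    # 1x1 expansion conv; the first bottleneck (expansion=1) skips it.
    expand = c_in * hidden if expansion != 1 else 0
    depthwise = kernel * kernel * hidden  # one k x k filter per channel
    project = hidden * c_out              # 1x1 linear projection (no ReLU)
    return expand + depthwise + project


def standard_conv_weights(c_in, c_out, kernel=3):
    """Weights of an ordinary k x k convolution, for comparison."""
    return kernel * kernel * c_in * c_out


def has_residual(c_in, c_out, stride):
    """The shortcut links bottlenecks only when their shapes match."""
    return stride == 1 and c_in == c_out


# A 32 -> 32 bottleneck expands to 192 channels internally.
print(inverted_residual_weights(32, 32))  # 14016 (whole bottleneck)
print(9 * 192)                            # 1728 (its depthwise 3x3 part)
print(standard_conv_weights(192, 192))    # 331776 (full 3x3 conv, same width)
```

At the expanded width of 192 channels, the depthwise 3 × 3 filter uses 1,728 weights where an ordinary 3 × 3 convolution would need 331,776, so the expensive spatial filtering stays cheap.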

Inception v3 is a widely used image recognition model that achieves an accuracy greater than 78.1% on the ImageNet dataset. The model is the culmination of many ideas developed by several researchers over the years. It is based on the original paper "Rethinking the Inception Architecture for Computer Vision" by Szegedy et al. The model is composed of symmetric and asymmetric building blocks, including convolutions, average pooling, max pooling, concatenations, dropout and fully connected layers. Batch normalization is used extensively throughout the model and is applied to activation inputs. The loss is computed with a softmax (Wang et al., 2019).

VGG19 is a variant of the VGG model that consists of 19 weight layers (16 convolutional layers and 3 fully connected layers), along with 5 max-pooling layers and 1 softmax layer. Other VGG variants include VGG11 and VGG16. VGG19 requires 19.6 billion FLOPs (Carvalho et al., 2017).
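Two of the design ideas mentioned above can be checked with a few lines of arithmetic. The sketch below is our own illustration, not code from the cited papers. It computes the fraction of weights saved by Inception v3's factorizations, replacing a 5 × 5 convolution with two stacked 3 × 3 ones (symmetric) and a 3 × 3 with a 1 × 3 followed by a 3 × 1 (asymmetric), assuming the same number of channels throughout. It also derives VGG19's layer composition and parameter count from the standard VGG19 configuration with a 1000-class ImageNet head; the 16 convolutional and 3 fully connected layers give the 19 weight layers in the model's name.

```python
# VGG19 convolutional configuration: numbers are 3x3 conv output channels,
# "M" is a 2x2 max-pooling layer.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg_layer_counts(cfg, n_fc=3):
    """Count conv, pooling and fully connected layers in a VGG config."""
    conv = sum(1 for v in cfg if v != "M")
    pool = cfg.count("M")
    return {"conv": conv, "pool": pool, "fc": n_fc, "weight_layers": conv + n_fc}


def vgg_param_count(cfg, in_ch=3, fc=(4096, 4096, 1000), spatial=7):
    """Weights + biases of all conv and FC layers for a 224x224 input."""
    params, ch = 0, in_ch
    for v in cfg:
        if v == "M":
            continue
        params += 3 * 3 * ch * v + v  # 3x3 kernel weights + biases
        ch = v
    n_in = ch * spatial * spatial  # flattened 7x7x512 feature map
    for n_out in fc:
        params += n_in * n_out + n_out
        n_in = n_out
    return params


def factorization_saving(original, factorized):
    """Fraction of weights saved by replacing one conv with smaller ones."""
    return 1 - factorized / original


# Inception-v3-style factorizations (weights per input/output channel pair).
five_by_five_saving = factorization_saving(5 * 5, 2 * 3 * 3)   # two 3x3 convs
asymmetric_saving = factorization_saving(3 * 3, 1 * 3 + 3 * 1)  # 1x3 then 3x1

print(vgg_layer_counts(VGG19_CFG))  # {'conv': 16, 'pool': 5, 'fc': 3, 'weight_layers': 19}
print(vgg_param_count(VGG19_CFG))   # 143667240
print(f"{five_by_five_saving:.0%} {asymmetric_saving:.0%}")  # 28% 33%
```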

VGG16 is a convolutional neural network model proposed by K. Simonyan and A. Zisserman of the University of Oxford in the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition". The model achieves 92.7% top-5 test accuracy on ImageNet and was one of the well-known models submitted to ILSVRC-2014. It improves on AlexNet by replacing the large kernel-sized filters (11 × 11 and 5 × 5 in the first and second convolutional layers, respectively) with several 3 × 3 kernel-sized filters stacked one after another. VGG16 was trained for weeks on NVIDIA Titan Black GPUs (Simonyan et al., 2014; Tammina et al., 2019).

ResNet is one of the most powerful deep neural networks; it achieved outstanding results in the 2015 ILSVRC classification challenge. ResNet also generalized well to other recognition tasks and won first place in ImageNet detection, ImageNet localization, COCO detection and COCO segmentation in the ILSVRC and COCO 2015 competitions. There are many variants of the ResNet architecture, that is, the same concept with a different number of layers: ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-110, ResNet-152, ResNet-164, ResNet-1202, etc. The number following the name ResNet indicates the number of layers in the network (He et al., 2016; Reddy et al., 2019).
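The layer arithmetic behind the VGG16 and ResNet descriptions can be sketched as follows (our own illustration). Stacking n stride-1 3 × 3 convolutions covers a (2n + 1) × (2n + 1) receptive field, so two 3 × 3 layers see the same 5 × 5 region as one 5 × 5 layer, with fewer weights and an extra non-linearity. For ResNet, the number in the name counts the stem convolution, the convolutions inside the residual blocks (two 3 × 3 per basic block in ResNet-18/34; three, 1 × 1, 3 × 3 and 1 × 1, per bottleneck block in ResNet-50 and deeper) and the final fully connected layer; the block counts per stage below are the standard ImageNet configurations from He et al. (2016).

```python
def stacked_receptive_field(n_layers, kernel=3):
    """Receptive field of n stacked stride-1 k x k convolutions."""
    return 1 + n_layers * (kernel - 1)


def conv_weights(kernel, channels, n_layers=1):
    """Weights of n k x k conv layers with `channels` inputs and outputs."""
    return n_layers * kernel * kernel * channels * channels


# Standard ImageNet ResNets: residual blocks per stage, convs per block.
RESNET_CONFIGS = {
    18: ([2, 2, 2, 2], 2),   # basic blocks: two 3x3 convs
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),   # bottleneck blocks: 1x1, 3x3, 1x1 convs
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}


def resnet_depth(blocks_per_stage, convs_per_block):
    """Stem conv + convs in residual blocks + final FC layer.

    By convention the 1x1 projection shortcuts are not counted.
    """
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7
C = 64
print(conv_weights(3, C, n_layers=2), conv_weights(5, C))      # 73728 102400
for name, (blocks, convs) in RESNET_CONFIGS.items():
    print(f"ResNet-{name}: {resnet_depth(blocks, convs)} layers")
```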

Results and discussion

The models are trained on the images described above and predict one of two classes, benign or malignant. To analyze the models, we calculated the following metrics:

Training loss: the sum of the errors during the training phase. A lower loss is better, unless it results from over-fitting.

Accuracy: the percentage of correctly predicted samples among all predictions during the training phase (classification accuracy).

Validation loss: the sum of the errors during the testing phase.

Validation accuracy: also estimated during the testing phase, it is the ratio of correctly predicted new data (data not used in the training step) to all predictions.
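The four metrics above can be sketched in plain Python as follows. This is an illustration under assumptions: labels are 0 (benign) and 1 (malignant), each model outputs a malignancy probability thresholded at 0.5, and the "errors" are measured with binary cross-entropy, the usual loss for two-class problems. The text describes the loss as a sum, whereas Keras reports the per-sample mean, so both reductions are shown. The same two functions give the training metrics on training data and the validation metrics on held-out data.

```python
import math


def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    preds = [1 if p >= threshold else 0 for p in y_prob]
    return sum(p == t for p, t in zip(preds, y_true)) / len(y_true)


def binary_cross_entropy(y_true, y_prob, reduction="mean", eps=1e-7):
    """Binary cross-entropy, summed or averaged over samples."""
    losses = []
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        losses.append(-(t * math.log(p) + (1 - t) * math.log(1 - p)))
    total = sum(losses)
    return total if reduction == "sum" else total / len(losses)


# Hypothetical predictions for four images (1 = malignant).
y_true = [1, 0, 1, 1]
y_prob = [0.9, 0.2, 0.4, 0.8]
print(accuracy(y_true, y_prob))                        # 0.75
print(round(binary_cross_entropy(y_true, y_prob), 3))  # 0.367
```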

Our models were trained with the parameters listed in Table 2.

Table 2. Parameters used.

| Input shape | Learning rate | Number of epochs | Batch size | Pooling layer |
|---|---|---|---|---|
| (224, 224, 3) | 1e-5 | 50 | 64 | Average |
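The paper does not include its model code. As a minimal sketch, assuming each backbone is frozen and topped with a global average pooling layer and a single sigmoid output unit, the parameters of Table 2 could map onto tf.keras as follows. The head design, the Adam optimizer and the binary cross-entropy loss are our assumptions rather than settings reported above, and `build_transfer_model` and `BACKBONES` are hypothetical names.

```python
import tensorflow as tf

# Hypothetical mapping from the model names in this paper to the
# corresponding tf.keras.applications constructors.
BACKBONES = {
    "MobileNetV2": tf.keras.applications.MobileNetV2,
    "InceptionV3": tf.keras.applications.InceptionV3,
    "VGG19": tf.keras.applications.VGG19,
    "VGG16": tf.keras.applications.VGG16,
    "ResNet50": tf.keras.applications.ResNet50,
}


def build_transfer_model(name, weights="imagenet", input_shape=(224, 224, 3),
                         learning_rate=1e-5):
    """Frozen pre-trained backbone + average pooling + sigmoid output (assumed head)."""
    base = BACKBONES[name](include_top=False, weights=weights,
                           input_shape=input_shape)
    base.trainable = False  # keep the pre-trained convolutional weights fixed

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # run BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)  # "Average" pooling, Table 2
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # P(malignant)

    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
                  loss="binary_crossentropy",
                  metrics=["accuracy"])
    return model
```

Training would then follow Table 2, e.g. `model.fit(train_ds, validation_data=val_ds, epochs=50)` with datasets batched at 64 (`train_ds` and `val_ds` are hypothetical). Each backbone expects its own input scaling, for example `tf.keras.applications.mobilenet_v2.preprocess_input` for MobileNetV2, which should be applied to the images before they reach the model.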

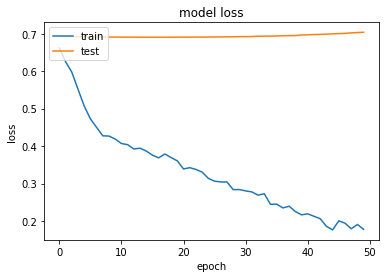

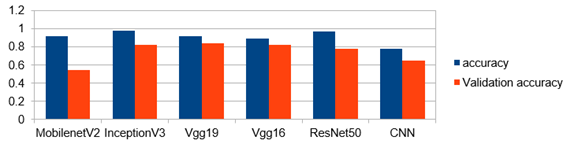

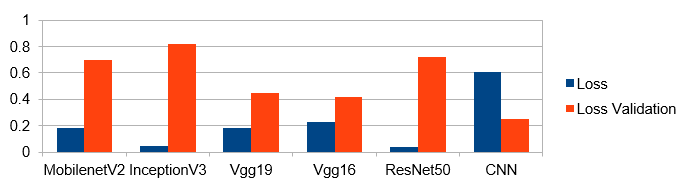

MobileNetV2 registered the following results: at epoch 50, the training accuracy is 0.92, while the validation accuracy stays constant at 0.54 from the first epoch to epoch 50 (Figure 7). The training loss decreases from 0.67 at epoch 1 to 0.18 at epoch 50, while the validation loss goes from 0.69 at the first epoch to 0.70 at epoch 50 (Figure 8).

InceptionV3 results

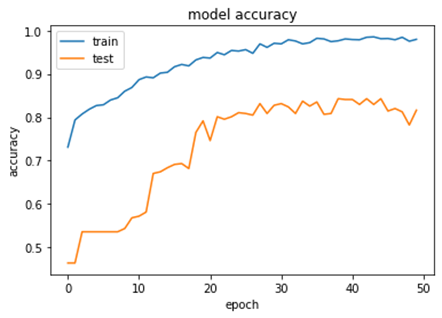

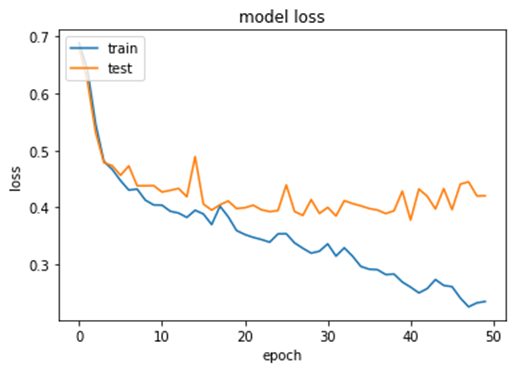

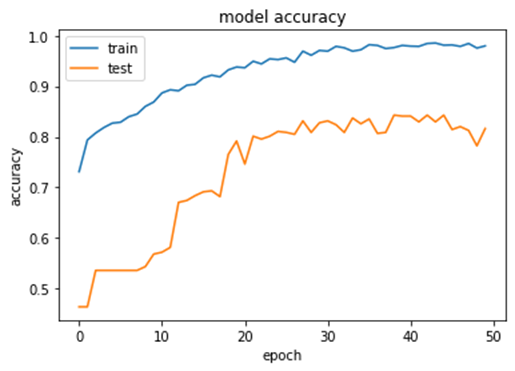

In the training phase, the accuracy is 0.98, and in the testing phase, the validation accuracy is 0.82 (Figure 9). The training loss is 0.048 and the validation loss is 0.82 (Figure 10).

VGG19 results

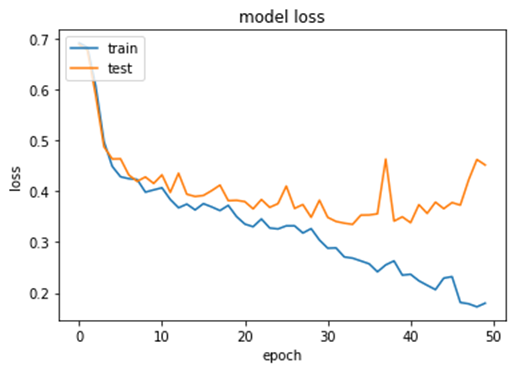

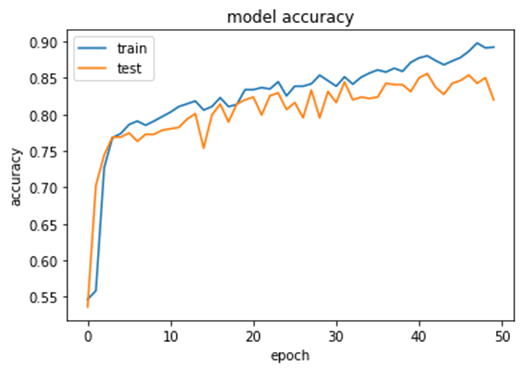

For the VGG19 model, the training accuracy is 0.92 and the validation accuracy is 0.84 (Figure 11). The training loss is 0.18 and the validation loss is 0.45 (Figure 12).

VGG16 results

The VGG16 model has a training accuracy of 0.89 and a validation accuracy of 0.82 (Figure 13). Its training loss is 0.23 and its validation loss is 0.42 (Figure 14).

ResNet50 results

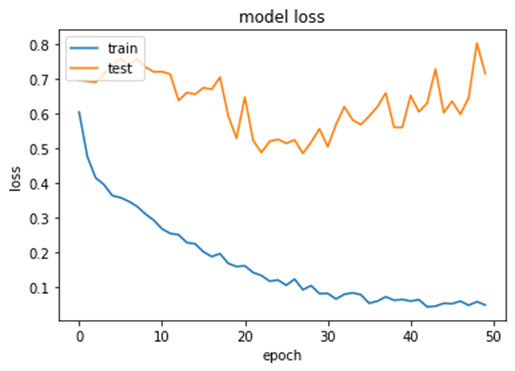

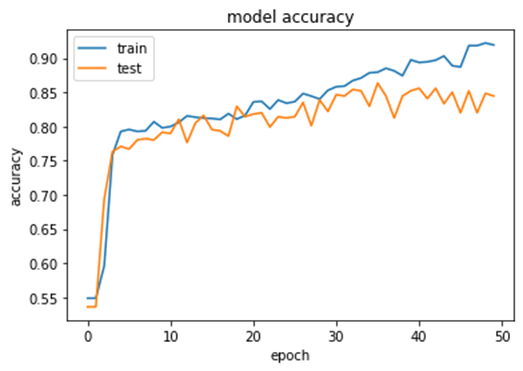

At epoch 50, the training accuracy is 0.97 and the validation accuracy is 0.78 (Figure 15). The training loss is 0.04 and the validation loss is 0.72 (Figure 16).

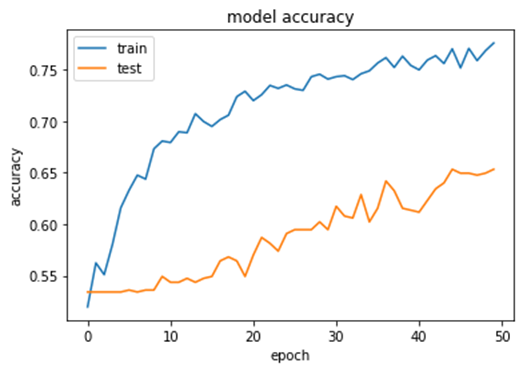

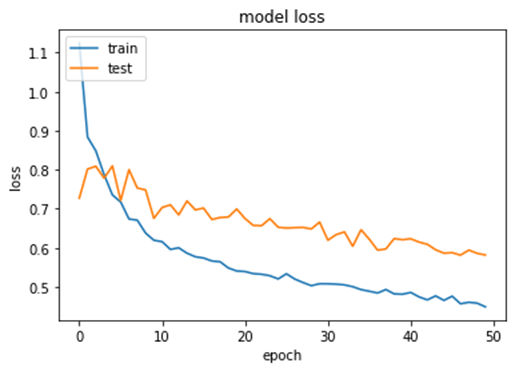

CNN results

In the training phase, the accuracy is 0.78 and the validation accuracy is 0.65 (Figure 17). The training loss is 0.61 and the validation loss is 0.25 (Figure 18).

Results analysis

After 50 epochs, InceptionV3 (0.98) and ResNet50 (0.97) achieve the best training accuracy, followed by VGG19 and MobileNetV2 (0.92), VGG16 (0.89) and the CNN (0.78). These results indicate that all five transfer learning models outperform the CNN model in training accuracy for skin cancer classification. In validation accuracy, InceptionV3, ResNet50, VGG19 and VGG16 also outperform the CNN, whereas MobileNetV2 stays at a constant 0.54. MobileNetV2 therefore fails to generalize: its training accuracy rises while its validation accuracy does not improve (Figure 19).

In training loss, all transfer learning models have a lower error than the CNN model, which indicates that they fit the training data better. Although the CNN has the lowest validation loss (0.25), its validation accuracy is lower than that of InceptionV3, ResNet50, VGG19 and VGG16 (Figure 20).
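The comparison above can be reproduced directly from the final-epoch values reported in this section. The sketch below (hypothetical helper names) ranks the models by each metric and computes the gap between training and validation accuracy, a common indicator of how well a model generalizes. Ranking by validation accuracy, which is measured on images not used for training, orders the models somewhat differently from ranking by training accuracy.

```python
# Final-epoch values reported in this section:
# (training accuracy, validation accuracy, training loss, validation loss)
RESULTS = {
    "MobileNetV2": (0.92, 0.54, 0.18, 0.70),
    "InceptionV3": (0.98, 0.82, 0.048, 0.82),
    "VGG19": (0.92, 0.84, 0.18, 0.45),
    "VGG16": (0.89, 0.82, 0.23, 0.42),
    "ResNet50": (0.97, 0.78, 0.04, 0.72),
    "CNN": (0.78, 0.65, 0.61, 0.25),
}


def rank_by(metric_index, reverse=True):
    """Model names sorted by one metric (ties keep the dictionary order)."""
    return sorted(RESULTS, key=lambda m: RESULTS[m][metric_index], reverse=reverse)


def generalization_gap(name):
    """Training accuracy minus validation accuracy, rounded to 2 decimals."""
    train_acc, val_acc, _, _ = RESULTS[name]
    return round(train_acc - val_acc, 2)


print("by training accuracy:  ", rank_by(0))
print("by validation accuracy:", rank_by(1))
print("by validation loss:    ", rank_by(3, reverse=False))
for name in RESULTS:
    print(f"{name}: gap {generalization_gap(name)}")
```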

The results illustrate the superior performance of the transfer learning models. The highest training accuracies are obtained by InceptionV3 (0.98), ResNet50 (0.97), VGG19 (0.92) and VGG16 (0.89), compared with 0.78 for the CNN model, while MobileNetV2 does not generalize to the validation data. We proposed a transfer learning approach for skin cancer detection and tested it with MobileNetV2, InceptionV3, VGG19, VGG16 and ResNet50. This approach has the following advantages:

ResNet50 and InceptionV3 achieve higher accuracy than the baseline CNN.

Lower computational cost: transfer learning models are pre-trained, so they do not require full training from scratch like the CNN.

Addresses the low contrast between skin lesions and normal skin.

Distinguishes melanoma lesions from normal skin.

Handles variations in skin conditions, e.g., skin color.

Facilitates early detection of skin cancer.

We conclude that transfer learning is simple and effective for skin cancer image classification and remains a very important area for image recognition.

Conclusion

Malignant melanoma is the main cause of death from skin cancer. Although imaging and diagnostic techniques for skin cancer such as dermoscopy are commonly used, automatic recognition remains challenging because of the difficulty of segmenting small lesion areas and the similarity between melanoma and non-melanoma lesions. Our work can help clinicians and patients detect this disease rapidly and early. The InceptionV3, ResNet50, VGG19 and VGG16 transfer learning models detect skin cancer with training accuracies of 0.98, 0.97, 0.92 and 0.89, respectively, which indicates the importance of transfer learning. In future work, we will test other transfer learning methods and apply them to other medical image data.